
I am currently an associate professor at the National University of Defense Technology (NUDT). Before joining NUDT, I received my PhD degree from Shanghai Jiao Tong University (SJTU) and the National University of Singapore (NUS) in 2024, under the supervision of Prof. Ya Zhang and Assistant Prof. Xinchao Wang. I received my bachelor's degree from SJTU in 2019; during my undergraduate studies, I worked as a research assistant at MVIG under the guidance of Prof. Cewu Lu. My current research focuses on anomaly detection and computer vision.

CV / Email / Google Scholar / GitHub / Chinese Homepage (中文主页)


Awards

Services

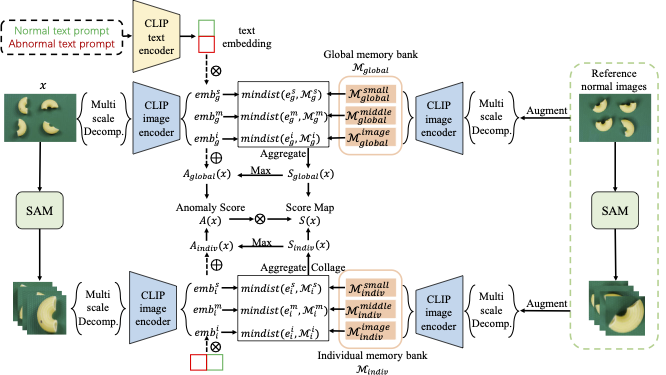

Chaoqin Huang*, Aofan Jiang*, Ya Zhang, Yanfeng Wang (* Equal Contribution)

VAND Runner-up Winner in CVPR 2023

The approach employs a global memory bank to capture features across the entire image, while an individual memory bank focuses on simplified scenes containing a single object. The method placed 4th in the zero-shot track and 2nd in the few-shot track of the Visual Anomaly and Novelty Detection (VAND) competition.
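
The summary above does not say how the memory banks are queried. As a rough illustration only, the sketch below assumes nearest-neighbor distance scoring, a common choice in memory-bank anomaly detectors, and is not the authors' implementation. The names `nearest_distance` and `anomaly_score` and the toy 2-D features are hypothetical.

```python
import math


def nearest_distance(feature, memory_bank):
    """Euclidean distance from one feature to its closest entry in the bank."""
    return min(math.dist(feature, ref) for ref in memory_bank)


def anomaly_score(patch_features, memory_bank):
    """Image-level score: the largest nearest-neighbor distance over all patches.

    Assumption: a patch far from every stored normal feature is anomalous,
    and one anomalous patch is enough to flag the whole image.
    """
    return max(nearest_distance(f, memory_bank) for f in patch_features)


# Toy memory bank built from "normal" features (hypothetical 2-D vectors).
bank = [(0.0, 0.0), (1.0, 0.0)]

normal_image = [(0.0, 0.0), (1.0, 0.0)]   # every patch matches the bank
defect_image = [(0.0, 0.0), (4.0, 3.0)]   # one patch lies far from the bank

normal_score = anomaly_score(normal_image, bank)
defect_score = anomaly_score(defect_image, bank)
```

Under this assumption, the defective image scores higher than the normal one because one of its patches has no close match in the bank.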

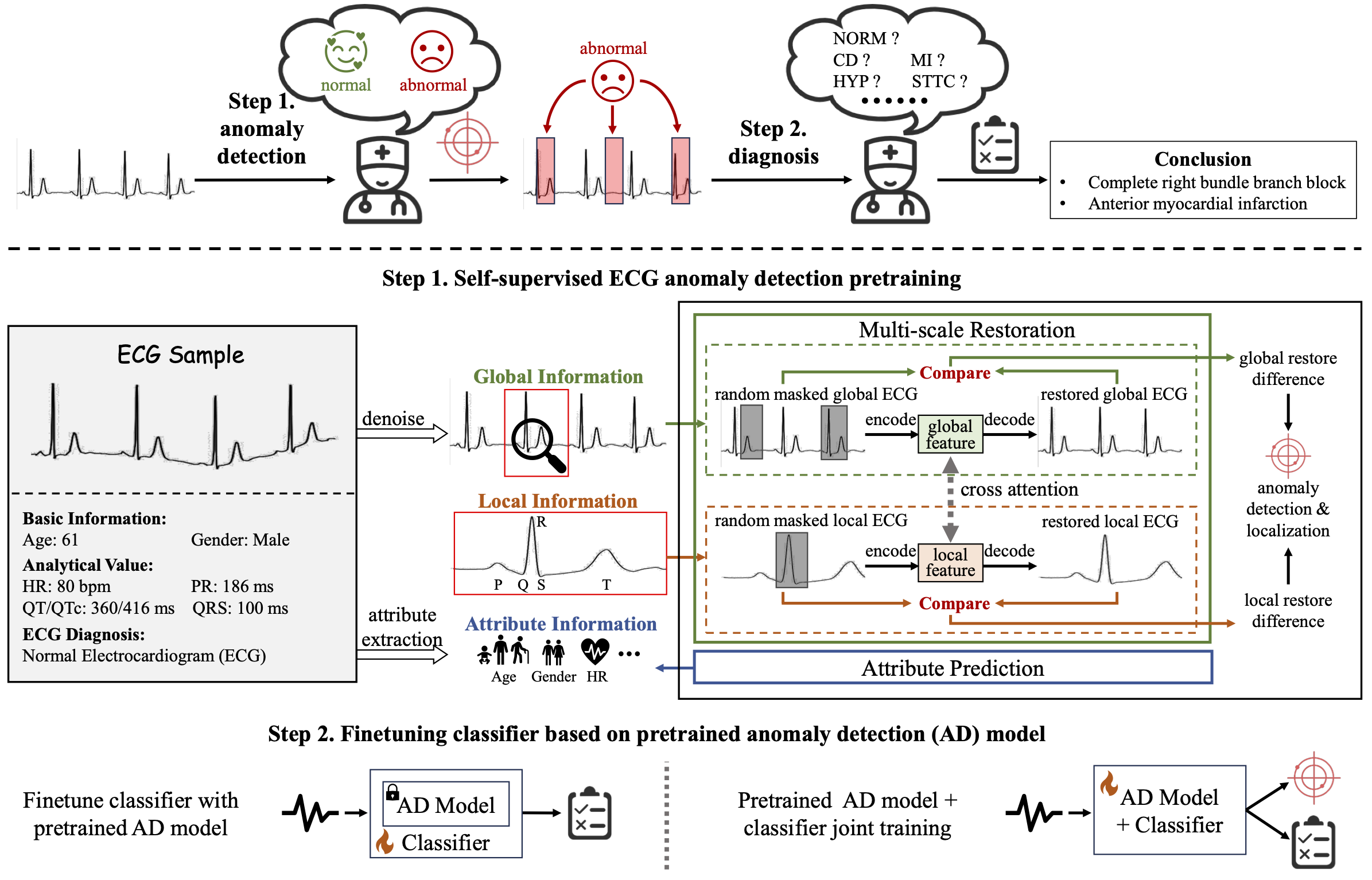

Aofan Jiang*, Chaoqin Huang*, Qing Cao, Yuchen Xu, Zi Zeng, Kang Chen, Ya Zhang, Yanfeng Wang (* Equal Contribution)

Nature Communications (under review)

Current computer-aided ECG diagnostic systems often miss rare but critical cardiac anomalies because ECG datasets are heavily imbalanced. This study introduces a novel approach that uses self-supervised anomaly detection pretraining to address this limitation. Validated on a dataset of over one million ECG records from clinical practice, characterized by a long-tail distribution across 116 distinct categories, the anomaly detection-pretrained ECG diagnostic model demonstrates a significant improvement in overall accuracy. Notably, our approach yields a 94.7% AUROC, 92.2% sensitivity, and 92.5% specificity for rare ECG types. Prospective validation in real-world clinical settings shows that our AI-driven approach improves diagnostic efficiency, precision, and completeness by 32%, 6.7%, and 11.8%, respectively, compared with standard practice.
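
As a reminder of how the three metrics quoted above are defined, here is a minimal sketch on made-up toy labels and scores, not data from the study. The function names and the 0.5 decision threshold are illustrative assumptions.

```python
def sensitivity_specificity(labels, preds):
    """Sensitivity = TP / (TP + FN); specificity = TN / (TN + FP)."""
    tp = sum(1 for y, p in zip(labels, preds) if y == 1 and p == 1)
    fn = sum(1 for y, p in zip(labels, preds) if y == 1 and p == 0)
    tn = sum(1 for y, p in zip(labels, preds) if y == 0 and p == 0)
    fp = sum(1 for y, p in zip(labels, preds) if y == 0 and p == 1)
    return tp / (tp + fn), tn / (tn + fp)


def auroc(labels, scores):
    """Probability that a random positive scores above a random negative.

    Ties count as half a win. This pairwise form is O(n^2) and is meant
    for illustration, not for million-record datasets.
    """
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0
               for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


# Toy example: 1 = anomalous record, 0 = normal record.
labels = [1, 1, 1, 0, 0, 0, 0, 1]
scores = [0.9, 0.8, 0.4, 0.3, 0.2, 0.6, 0.1, 0.7]
preds = [1 if s >= 0.5 else 0 for s in scores]  # assumed threshold

sens, spec = sensitivity_specificity(labels, preds)
auc = auroc(labels, scores)
```

AUROC is threshold-free, whereas sensitivity and specificity depend on the chosen operating point.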

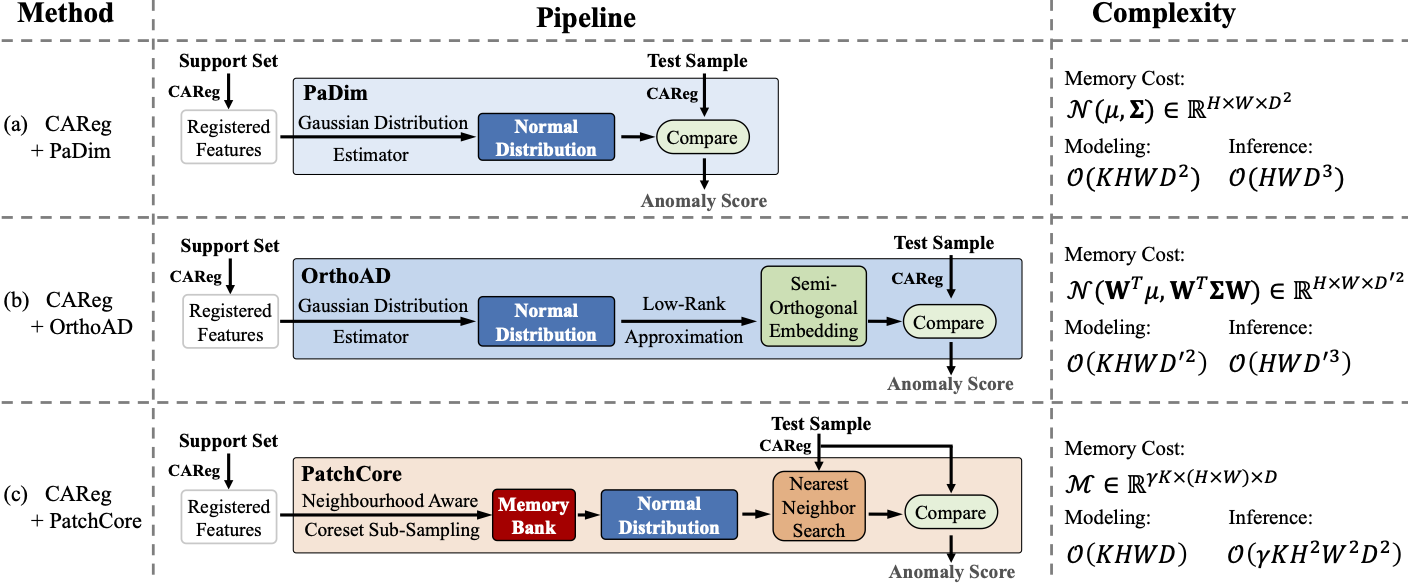

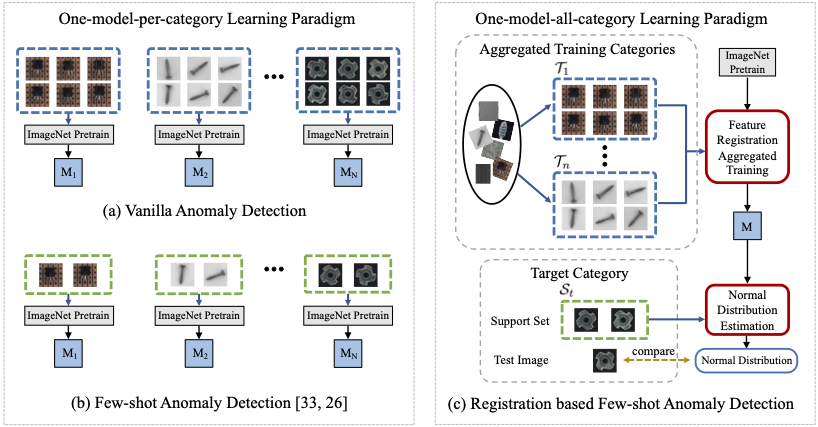

Chaoqin Huang, Haoyan Guan, Aofan Jiang, Yanfeng Wang, Michael Spratling, Xinchao Wang, Ya Zhang

IEEE Transactions on Neural Networks and Learning Systems (TNNLS) 2024

Existing anomaly detection methods require a dedicated model for each category, a paradigm that is computationally expensive and inefficient. This paper proposes the first few-shot anomaly detection (FSAD) method that requires no model fine-tuning for novel categories, enabling a single model to be applied to all categories. It improves the current state-of-the-art for FSAD by 11.3% and 8.3% on the MVTec and MPDD benchmarks, respectively.

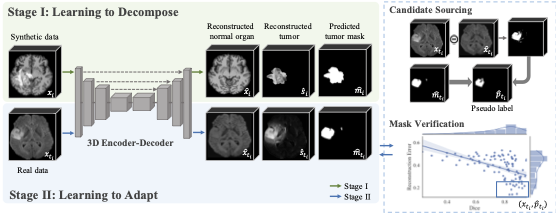

Xiaoman Zhang, Weidi Xie, Chaoqin Huang, Ya Zhang, Xin Chen, Qi Tian, Yanfeng Wang

IEEE Journal of Biomedical and Health Informatics (JBHI) 2023

We proposed a two-stage Sim2Real training regime for unsupervised tumor segmentation, in which we first pre-train a model with simulated tumors and then adopt a self-training strategy for downstream data adaptation. Evaluated on BraTS2018 for brain tumor segmentation and LiTS2017 for liver tumor segmentation, the method achieves state-of-the-art segmentation performance under the unsupervised setting.

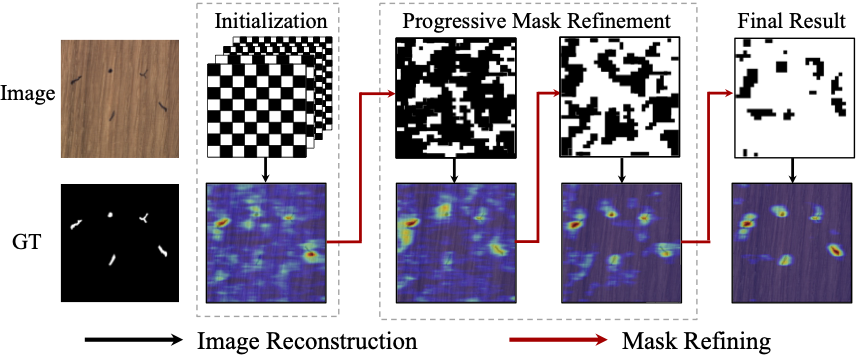

Chaoqin Huang, Qinwei Xu, Yanfeng Wang, Yu Wang, Ya Zhang

IEEE Transactions on Multimedia (TMM) 2022

We proposed a self-supervised learning approach named Self-Supervised Masking (SSM) for unsupervised anomaly detection and localization. SSM not only enhances the training of the inpainting network but also greatly improves the efficiency of mask prediction at inference. We also proposed a progressive mask refinement approach that gradually uncovers the normal regions and locates the anomalous regions. The proposed method outperforms several state-of-the-art methods, achieving 98.3% AUC on Retinal-OCT (medical diagnosis) and 93.9% AUC on MVTec AD (industrial defect detection).

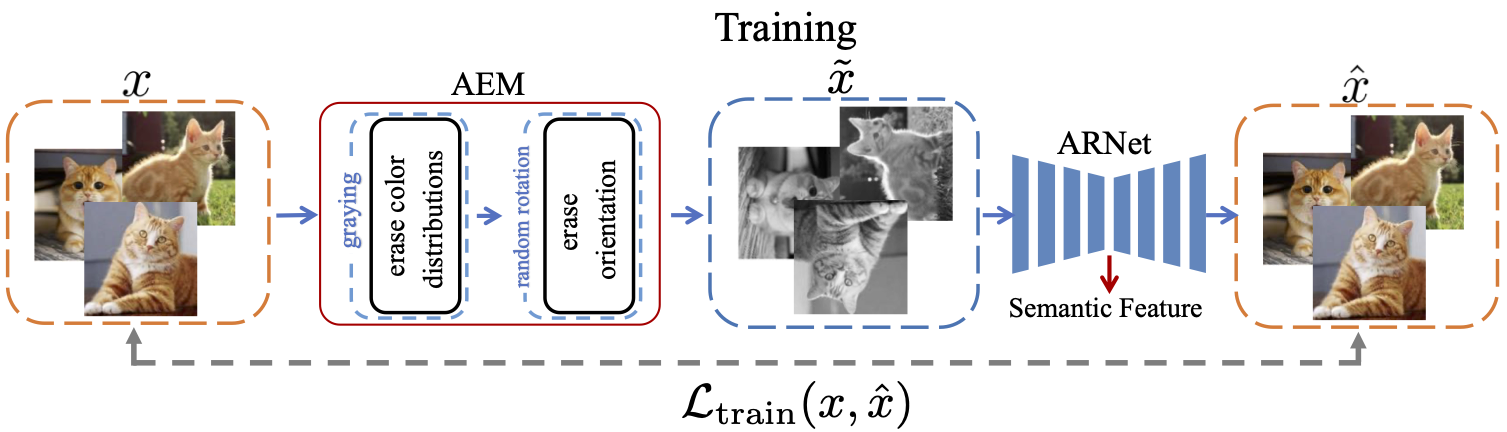

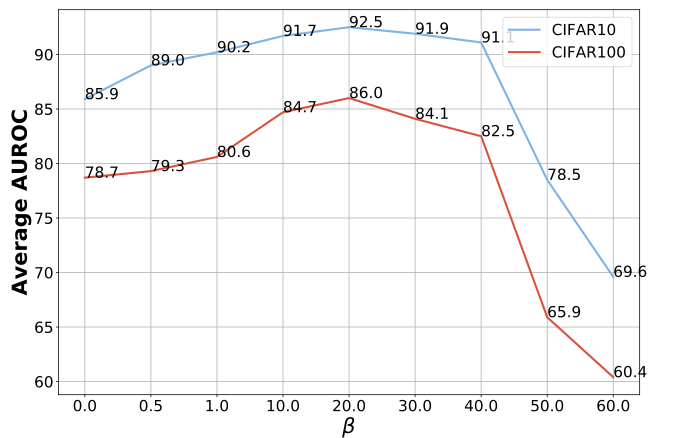

Fei Ye*, Chaoqin Huang*, Jinkun Cao, Maosen Li, Ya Zhang, Cewu Lu (* Equal Contribution)

IEEE Transactions on Multimedia (TMM) 2022 (ESI Highly Cited Paper)

We proposed to erase selected attributes from the original data and reformulate anomaly detection as a restoration task in the self-supervised learning paradigm, where normal and anomalous data are expected to be distinguishable by their restoration errors. By being forced to restore the original image, the network learns semantic feature embeddings related to the erased attributes. The proposed method significantly outperforms several state-of-the-art methods on multiple benchmark datasets, especially on ImageNet, where it increases the AUC of the top-performing baseline by 10.1%.

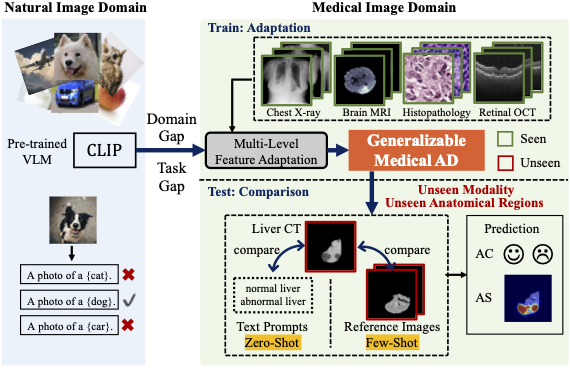

Chaoqin Huang*, Aofan Jiang*, Jinghao Feng, Ya Zhang, Xinchao Wang, Yanfeng Wang (* Equal Contribution)

IEEE/CVF Computer Vision and Pattern Recognition Conference (CVPR) 2024 (Highlight) / code

This paper introduces a lightweight multi-level adaptation and comparison framework to repurpose the CLIP model for medical anomaly detection. Our approach integrates multiple residual adapters into the pre-trained visual encoder, enabling a stepwise enhancement of visual features across different levels. The adapted features exhibit improved generalization across various medical data types, even in zero-shot scenarios where the model encounters medical modalities and anatomical regions unseen during training.

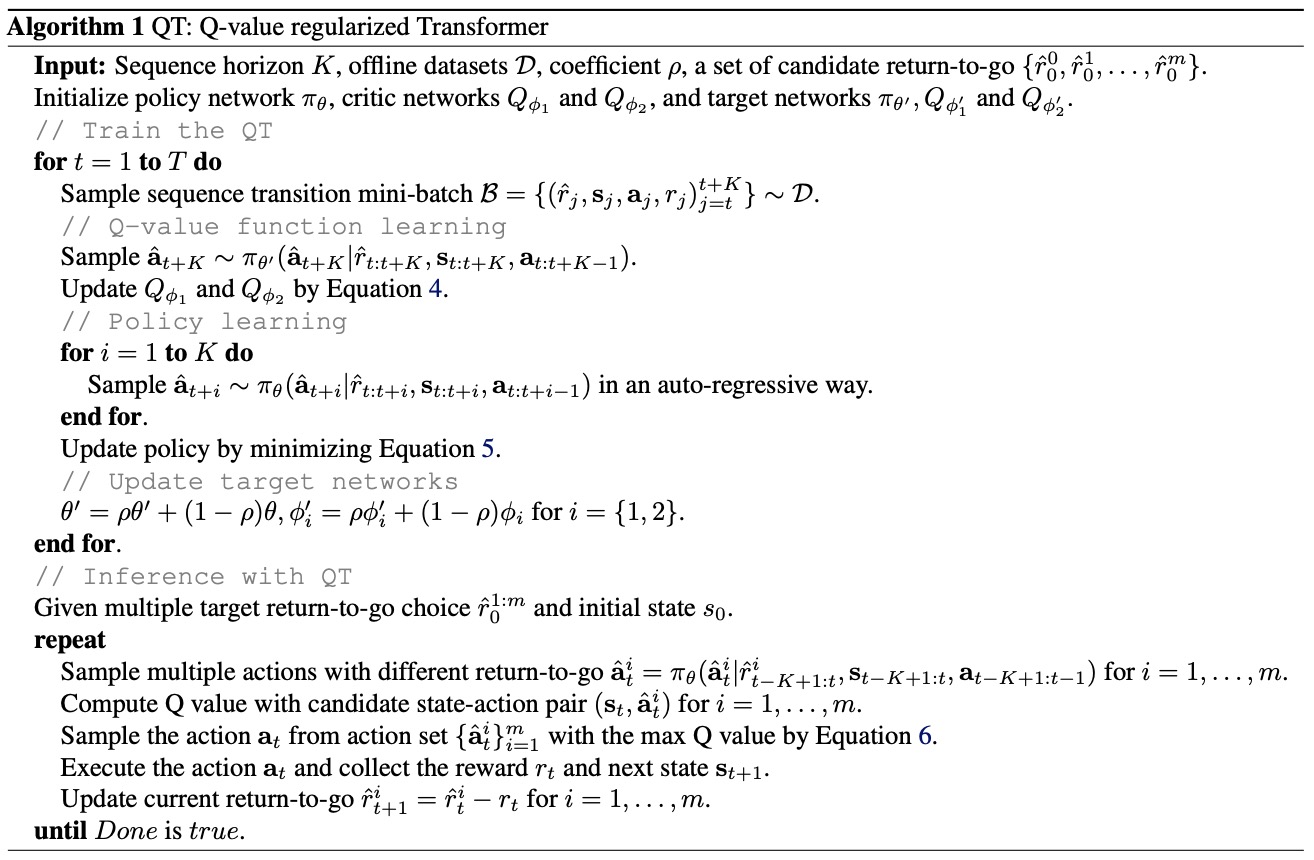

Shengchao Hu, Ziqing Fan, Chaoqin Huang*, Li Shen, Ya Zhang, Yanfeng Wang, Dacheng Tao

International Conference on Machine Learning (ICML) 2024

We proposed the Q-value regularized Transformer (QT), which combines the trajectory modeling ability of the Transformer with the predictability of optimal future returns from dynamic programming (DP) methods. QT learns an action-value function and integrates a term maximizing action-values into the training loss of conditional sequence modeling (CSM), which aims to seek optimal actions that align closely with the behavior policy. Empirical evaluations on D4RL benchmark datasets demonstrate the superiority of QT over traditional DP and CSM methods, highlighting the potential of QT to enhance the state-of-the-art in offline RL.
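
One way to read the loss described above is as the CSM objective minus a weighted action-value term. The form below is a sketch under that reading, not the exact formulation from the paper: the weight $\eta$, the predicted action $\hat{a}$, and the subscripts $\theta$ and $\phi$ are notation assumed here for illustration.

```latex
% Assumed notation: \theta = Transformer policy parameters,
% \phi = learned action-value function parameters,
% \hat{a} = action predicted by the Transformer for state s,
% \eta > 0 = hypothetical weight balancing the two terms.
\mathcal{L}_{\mathrm{QT}}(\theta)
  = \mathcal{L}_{\mathrm{CSM}}(\theta)
  - \eta \, \mathbb{E}_{s}\!\left[ Q_{\phi}\big(s, \hat{a}\big) \right]
```

Minimizing the first term keeps predicted actions close to the behavior policy, while the subtracted term pushes them toward higher estimated action-values.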

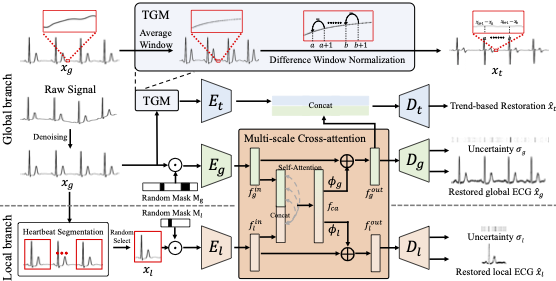

Aofan Jiang*, Chaoqin Huang*, Qing Cao, Shuang Wu, Zi Zeng, Kang Chen, Ya Zhang, Yanfeng Wang (* Equal Contribution)

International Conference on Medical Image Computing and Computer-Assisted Intervention (MICCAI) 2023 (Early accepted) / code

Detecting anomalies in Electrocardiogram (ECG) data is particularly challenging due to the substantial inter-individual differences and the presence of anomalies in both global rhythm and local morphology. Imitating the diagnostic process followed by experienced cardiologists, we proposed a novel multi-scale cross-restoration framework for ECG anomaly detection and localization, achieving state-of-the-art performance on our proposed large-scale ECG benchmark and two other well-known ECG datasets.

Chaoqin Huang, Haoyan Guan, Aofan Jiang, Ya Zhang, Michael Spratling, Yanfeng Wang

European Conference on Computer Vision (ECCV) 2022 (Oral Presentation) / code

We considered few-shot anomaly detection (FSAD), a practical yet under-studied setting for anomaly detection, where only a limited number of normal images are provided for each category during training. Inspired by how humans detect anomalies, i.e., by comparing an image in question to normal images, we leveraged registration, an image alignment task that is inherently generalizable across categories, as the proxy task to train a category-agnostic anomaly detection model. During testing, anomalies are identified by comparing the registered features of the test image with those of its corresponding support (normal) images. Experimental results show that the proposed method outperforms state-of-the-art FSAD methods by 3%-8% in AUC on the MVTec and MPDD benchmarks.

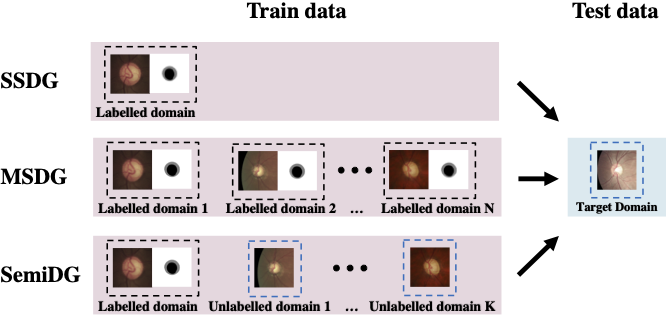

Ruipeng Zhang, Qinwei Xu, Chaoqin Huang, Ya Zhang, Yanfeng Wang

IEEE International Symposium on Biomedical Imaging (ISBI) 2022

We introduced a general regularization-based semi-supervised domain generalization method, in which the stability and orthogonality of the learned features serve as two regularization terms in the learning objective. Both terms can be applied to labeled and unlabeled data alike.

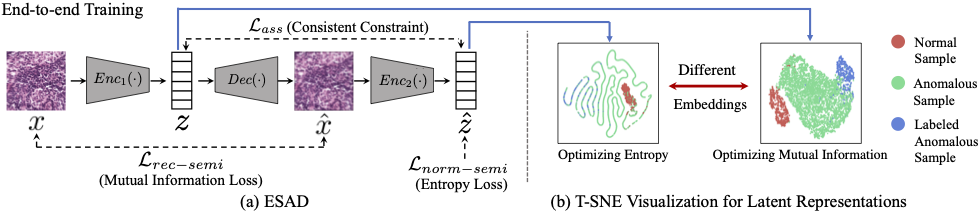

Chaoqin Huang, Fei Ye, Peisen Zhao, Ya Zhang, Yanfeng Wang, Qi Tian

British Machine Vision Conference (BMVC) 2021

We proposed a new KL-divergence-based objective function and showed that two factors, the mutual information and the entropy, together constitute an integral objective function for anomaly detection. Extensive experiments show that the proposed method significantly outperforms several state-of-the-art methods on multiple benchmark datasets, including medical diagnosis and several classic anomaly detection benchmarks.

Fei Ye, Huangjie Zheng, Chaoqin Huang, Ya Zhang

IEEE International Conference on Image Processing (ICIP) 2021

We proposed an information-theoretic objective function for anomaly detection that maximizes the distance between normal and anomalous data in terms of the joint distribution of images and their representations. We derived a lower bound for this objective that weights the trade-off between mutual information and entropy, leading to a novel information-theoretic framework for unsupervised image anomaly detection.

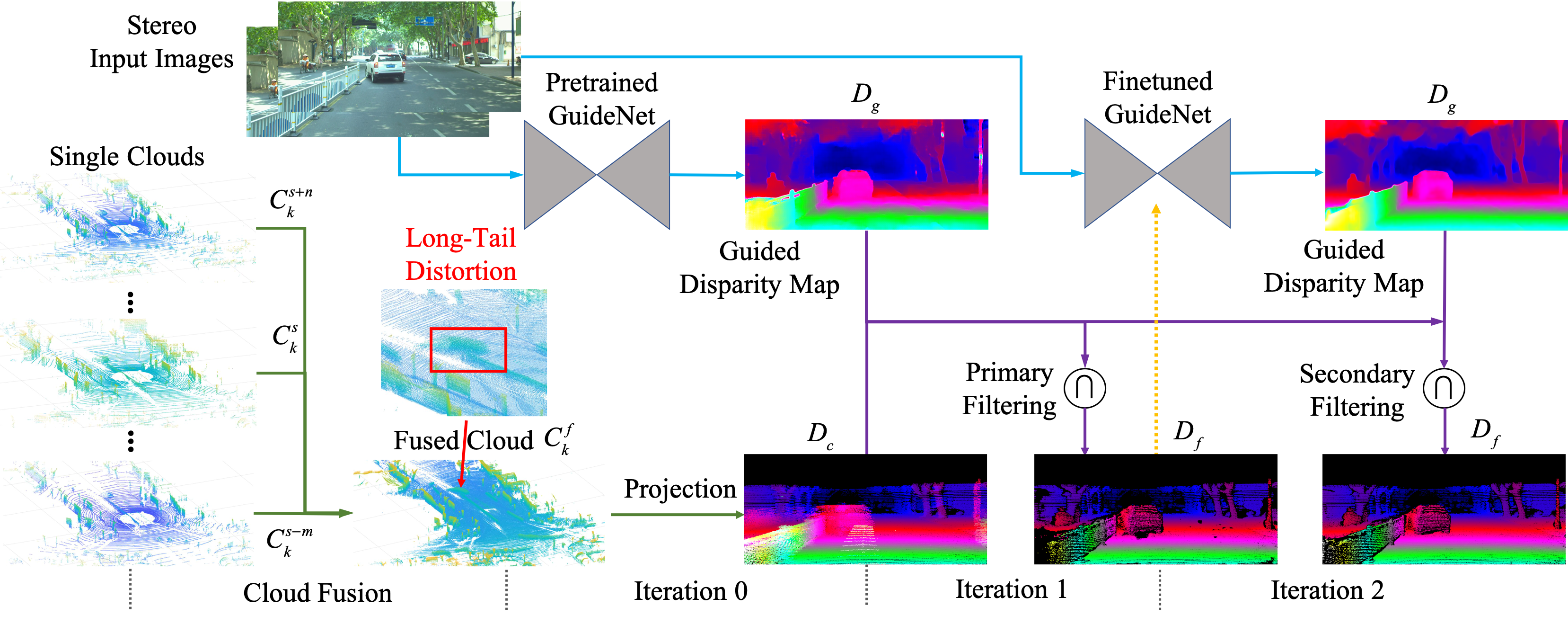

Guorun Yang, Xiao Song, Chaoqin Huang, Zhidong Deng, Jianping Shi, Bolei Zhou

IEEE/CVF Computer Vision and Pattern Recognition Conference (CVPR) 2019

We constructed a novel large-scale stereo dataset named DrivingStereo. It contains over 100k images covering a diverse set of driving scenarios. High-quality disparity labels are produced from multi-frame LiDAR points using a model-guided filtering strategy.

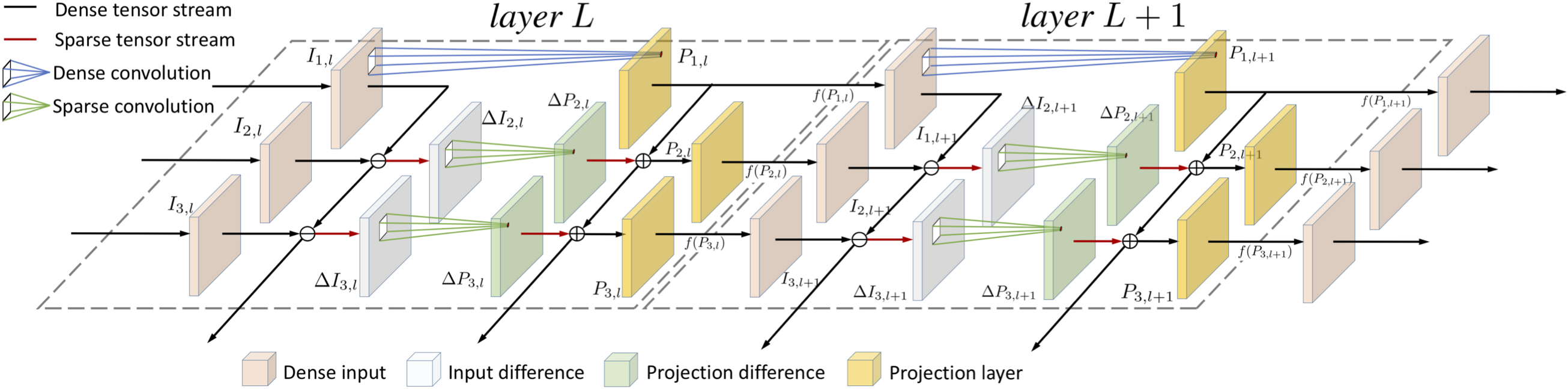

Bowen Pan, Wuwei Lin, Xiaolin Fang, Chaoqin Huang, Bolei Zhou, Cewu Lu

IEEE/CVF Computer Vision and Pattern Recognition Conference (CVPR) 2018

We proposed a framework called Recurrent Residual Module (RRM) to accelerate CNN inference for video recognition tasks. The framework exploits the similarity between the intermediate feature maps of two consecutive frames to substantially reduce redundant computation.

Based on a template by Jon Barron.
